How faithful and trustworthy are neuron explanations in mechanistic interpretability?

Understanding what individual units in a neural network represent is a cornerstone of mechanistic interpretability. A common approach is to generate human-readable text explanations that describe what each neuron does. But how can we trust that these explanations faithfully reflect the model's actual behavior?

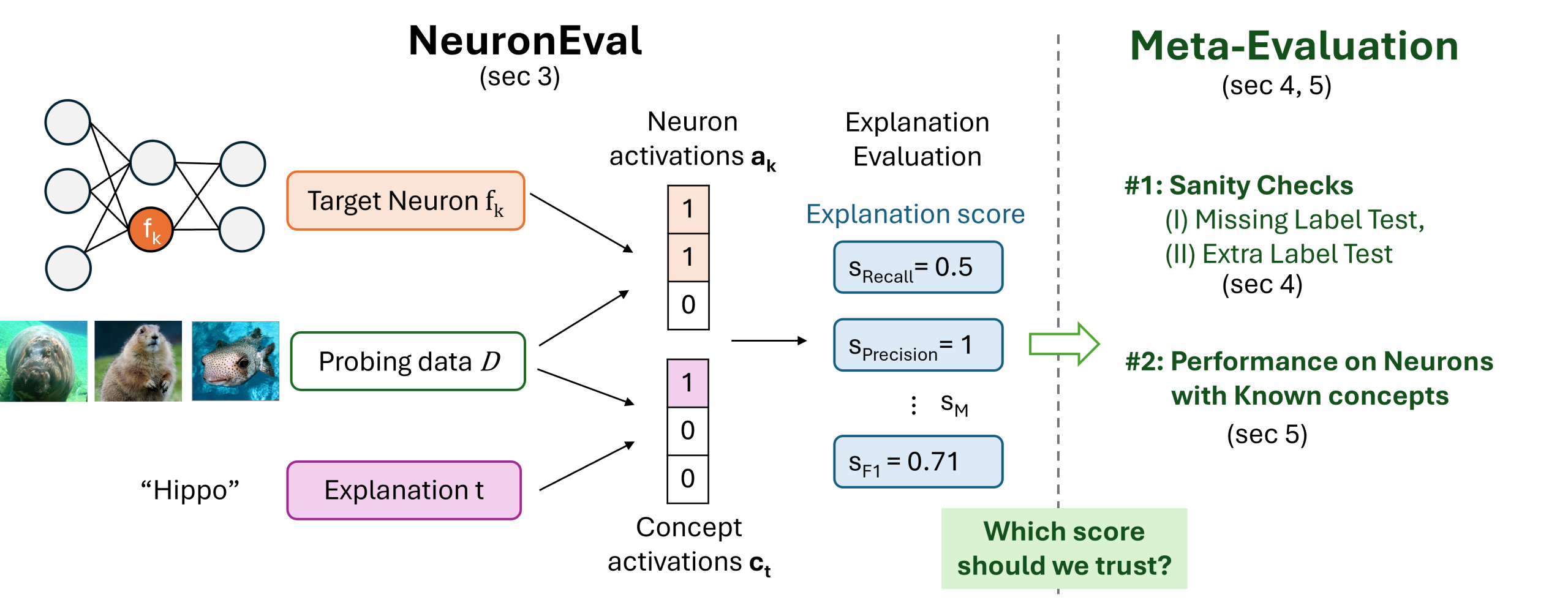

In work published at the 2025 International Conference on Machine Learning (ICML'25), TILOS affiliate faculty member Lily Weng and graduate students Tuomas Oikarinen and Ge Yan introduce NeuronEval, a unified mathematical framework that brings together many existing methods for evaluating neuron explanations. The framework clarifies how the different metrics relate to one another and enables rigorous analysis of evaluation pipelines.

The team further proposes two simple but powerful sanity checks for explanation metrics and shows that many widely used metrics fail them. These findings lead to practical guidelines for trustworthy evaluation and identify a set of metrics that pass these tests. This work establishes a foundation for more principled and reliable assessment of interpretability methods going forward. See the ICML'25 paper *Evaluating Neuron Explanations: A Unified Framework with Sanity Checks* and the accompanying project page.